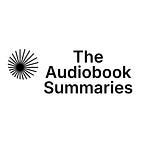

Amir Husain

See how human–machine fusion is redefining freedom, power, and identity

What if the most powerful force shaping your future weren’t politics, economics, or even biology – but code?

From the apps on your phone to the systems guiding aircraft and moving financial markets, software has outgrown its role as a tool. It has become the invisible architecture of life, amplifying human intent into global action. A tweak in an algorithm can redirect fortunes, reshape public opinion, or rewire daily habits.

Trillions of devices now sense, decide, and act in unison with us. We live inside a cybernetic society – a fusion of human and machine, biology and computation. The promise is immense: cognitive cities that adapt to their citizens, exoskeletons that extend physical endurance, and networks that let communities govern themselves. But the perils are just as stark: privacy collapse, algorithmic control, and autonomous weapons accelerating conflict.

Amir Husain’s The Cybernetic Society explores these tensions. His central claim is simple yet unsettling: the future will be determined not by whether humans or machines “win,” but by how we design the feedback systems binding us together.

The cybernetic age begins

The roots of cybernetics stretch back to the mid-20th century. Norbert Wiener, influenced by Arturo Rosenblueth, argued that no system exists in isolation. Humans and machines form feedback loops, where decisions flow back and forth.

That vision now defines everyday reality. Financial markets move at machine speed; social platforms turn private gestures into systemic political waves. Algorithms no longer just predict outcomes – they produce them.

Change arrives in bursts. Neural networks sat on academic shelves for decades before exploding into everyday use once computing power scaled. Social platforms remained fringe curiosities until reaching adoption thresholds, then cascaded into global cultural forces.

The same reflexivity reshapes organizations and cities. Firms increasingly behave like organisms, sensing and responding through continuous data loops. Predictive maintenance, AI logistics, and adaptive platforms replace static hierarchies.

Cities, too, embody this vision. Neom, Saudi Arabia’s planned $500-billion “cognitive city,” is designed to sense, learn, and adapt in real time. Its transport, energy, and governance systems are bound by AI loops. Whether this intelligence empowers citizens or enforces control depends on design.

The lesson is stark: feedback systems are double-edged. They can amplify freedom and resilience – or concentrate power and coercion.

Cybernetics, society, and power

Smart cities symbolize both utopia and trap. Optimized energy use, predictive safety, and seamless mobility sound liberating. Yet the same infrastructure can enforce constant surveillance.

Beneath these experiments lies the gravitational pull of the cloud. Amazon already pairs 1.5 million employees with 750,000 robots, its logistics directed by algorithms. Its cloud division – along with Microsoft and Google – underpins much of the global economy. These backbones centralize data and power at unprecedented scale.

Once built, infrastructures rarely pivot. Motorola’s Iridium satellite system collapsed under sunk costs, unable to adapt. The same risk faces cognitive cities and mega-platforms: efficiency today hardens into constraint tomorrow.

Infrastructure is never neutral. It encodes values, decides who controls data, and locks in trajectories. Economist George Gilder notes that capitalism thrives on surprise – unexpected jolts of innovation. Cybernetic platforms determine whether those surprises will flourish or be suppressed.

Power is no longer merely political or economic. It is infrastructural, baked into the code and feedback loops that govern daily life.

Human augmentation and hyperwar

Cybernetics is not just external. It is seeping into our bodies and decisions.

Picture taking an exam through a brain–computer interface: the headset monitors your attention, adjusts the challenge, and adapts in real time. Neural activity becomes both input and output.
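The sense-and-adjust loop in that scenario can be sketched in a few lines of Python. This is only a toy illustration, not how any real brain–computer interface works: the function names, the 0.7 attention target, the gain, and the simulated attention model are all assumptions made for the example.

```python
def adjust_difficulty(difficulty: float, attention: float,
                      target: float = 0.7, gain: float = 0.5) -> float:
    """Proportional feedback: raise difficulty when attention is above the
    target, lower it when attention is below. Result is clamped to [0, 1]."""
    error = attention - target
    return min(1.0, max(0.0, difficulty + gain * error))


def simulated_attention(difficulty: float) -> float:
    """Hypothetical stand-in for a headset reading.
    Assumes attention drops linearly as the task gets harder."""
    return min(1.0, max(0.0, 1.0 - 0.6 * difficulty))


def run_session(steps: int = 30, difficulty: float = 0.1) -> float:
    """Repeat the loop: sense attention, then adjust the challenge."""
    for _ in range(steps):
        attention = simulated_attention(difficulty)             # sense
        difficulty = adjust_difficulty(difficulty, attention)   # act
    return difficulty
```

Under these assumptions the loop settles at the difficulty where simulated attention equals the target. The output of each step becomes the input of the next, which is the defining feature of a feedback system.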

Technologies range from cheap EEG caps to invasive cortical implants. Early experiments already translate neural activity into speech or restore motion. Firms like Neuralink and Paradromics push forward, promising medical breakthroughs – but also raising questions of safety, access, and identity.

Augmentation expands further. Exoskeletons reduce fatigue for soldiers and workers. Military prototypes such as TALOS in the US, along with exoskeleton programs from Russia's Rostec, aim to extend endurance, raising ethical dilemmas around responsibility and inequality.

On the battlefield, cybernetics accelerates into hyperwar. Drones, loitering munitions, and autonomous swarms compress the OODA loop – observe, orient, decide, act – into seconds. Conflicts in Ukraine and Gaza already showcase machine-driven warfare. The United States, through agencies such as DARPA, is racing with China and Russia to automate combat at scale.

Official principles emphasize “responsibility” and “traceability,” but in practice, the momentum is toward autonomy. The very feedback loops that adapt a student’s exam are also directing weapons.

The line between augmentation and automation blurs. Cybernetic systems extend capacity – but also shift accountability. Who is responsible when decisions emerge at machine speed?

Cycles and traps

Cybernetics doesn’t erase history. It accelerates its cycles.

Peter Turchin’s field of cliodynamics argues that societies rise and fall in recurring waves of prosperity and unrest. Inequality, elite overproduction, and fiscal strain drive instability. His “Political Stress Index” warns when turbulence looms.

Technology adds fuel. It produces more aspiring elites than there are positions of power, accelerates inequality, and magnifies unrest. Turchin argues the United States shows signs of entering such a disruptive phase – echoing the declines of earlier empires.

Even outside historical cycles, citizens face another trap: privacy collapse. We are already “opted in.” Keystrokes can be decoded acoustically, GPS traces sold, and cloud files retained long after deletion. Apps map movements more precisely than governments. Even analog escape – the Kremlin’s return to typewriters after Snowden – proves futile when side-channel leaks persist.

The combined effect is stark: recurring cycles of unrest now intersect with infrastructures of surveillance. Opting out is no longer realistic. The question becomes how to design counter-systems that restore agency.

The technologies of freedom

Not all feedback loops entrench control. Some point toward freedom.

Tim Berners-Lee’s Solid project imagines giving every person a private data pod, granting control over who can access what. Community networks like NYC Mesh connect neighbors through rooftop antennas, resilient without telecom monopolies. Self-hosted services like Nextcloud let individuals manage email and storage on their own machines.

Even AI can follow this path. Federated learning trains models on your device while keeping data local. Google’s Gboard already does this for text prediction. Security researchers, meanwhile, prepare for quantum-era threats with algorithms like Kyber and Dilithium, designed to withstand attacks from quantum computers.
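To make the federated idea concrete, here is a minimal sketch of federated averaging in plain Python. It is an illustration under stated assumptions, not Gboard's actual implementation: the tiny linear model, the three devices, their data, and every function name are hypothetical.

```python
from typing import List, Tuple

Weights = Tuple[float, float]        # (slope, intercept) of a tiny linear model
Samples = List[Tuple[float, float]]  # private (x, y) pairs held by one device


def local_update(weights: Weights, data: Samples,
                 lr: float = 0.05, epochs: int = 20) -> Weights:
    """Gradient descent on one device's own data. Only weights are returned."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Server step: average device weights, weighted by local dataset size."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return (w, b)


def train(devices: List[Samples], rounds: int = 50) -> Weights:
    """Each round: devices train locally, the server averages their weights."""
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, d) for d in devices]
        global_weights = federated_average(updates, [len(d) for d in devices])
    return global_weights


# Three hypothetical devices, each holding private samples of y = 2x + 1.
DEVICES: List[Samples] = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (-1.0, -1.0)],
    [(0.5, 2.0), (1.5, 4.0)],
]
```

Calling `train(DEVICES)` should recover a slope near 2 and an intercept near 1, even though the server only ever sees model weights and never the raw samples. Real deployments add further protections, such as secure aggregation, that this sketch leaves out.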

Identity and governance are being reimagined through decentralized identifiers, verifiable credentials, and blockchain-based organizations where communities vote and act transparently. Energy experiments like the Brooklyn Microgrid show how neighbors can trade electricity directly, turning infrastructure into a commons.

Skills matter as much as tools. Literacy campaigns from the Philippines to Singapore emphasize privacy and security as civic competencies. In Lahore, the MinusFifteen Project links sensors, mesh networks, and blockchain to cool neighborhoods through collective action.

None of these projects guarantee liberation. But they sketch a direction: technologies designed to be steered by communities, not imposed upon them.

Closing words

Husain’s argument is clear: the future is not a contest between humans and machines. It is about how feedback systems are designed, governed, and distributed.

Every layer of society – finance, cities, warfare, identity – is becoming cybernetic. The same loops that adapt supply chains also guide drone swarms. The same architectures that collapse privacy can empower communities.

History reminds us that inequality and unrest follow recurring patterns. Technology reminds us that opting out is no longer an option. But the technologies of freedom point to a path forward: infrastructures that decentralize power, reward transparency, and extend human agency.

The cybernetic society is already here. Its trajectory is not fixed. The decisive question is whether it will deepen dependence – or open space for freedom and responsibility.

About the author

Amir Husain is an entrepreneur, AI technologist, and inventor with more than 30 patents. He is the founder of SparkCognition and author of The Sentient Machine, Generative Art, and Generative AI for Leaders. He also co-authored Hyperwar, exploring the military consequences of autonomous systems.